How AI Chatbots Are Built (A Behind-the-Scenes Look)

Understand what makes up 90%+ of AI chat apps

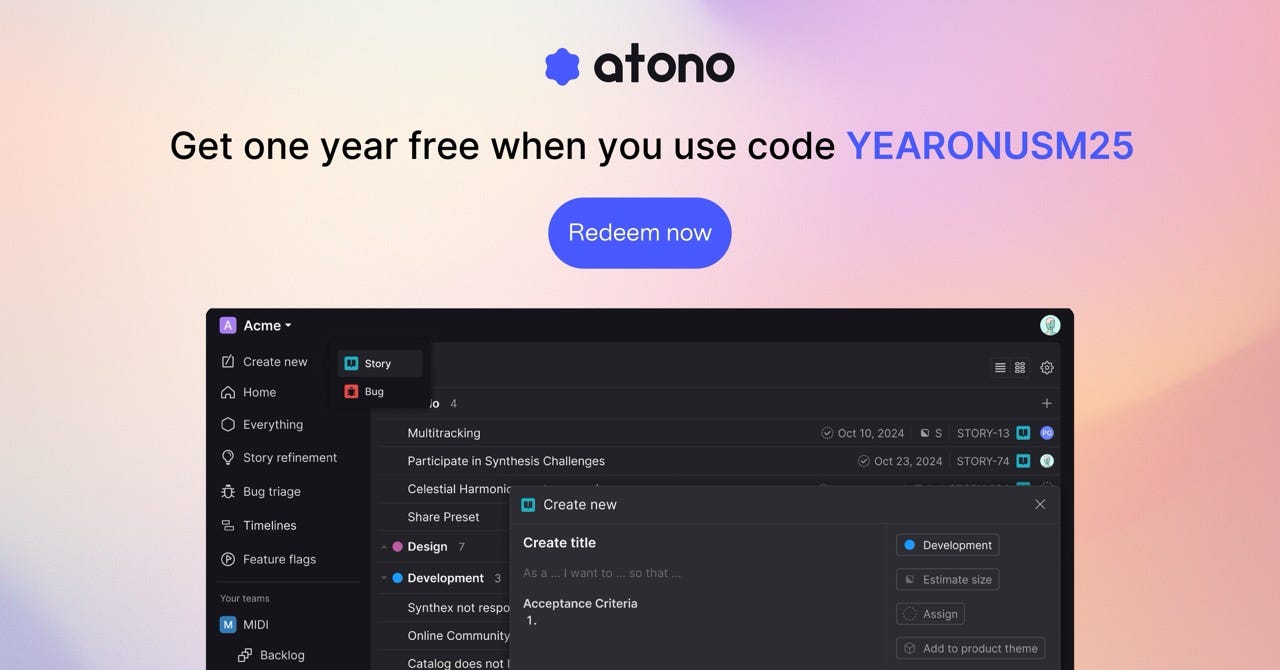
